Get started with Llama 3.1

The current generation of Llama models is 3.3. While this guide focuses on Llama 3.1, the concepts and integration techniques described here also apply to the newer Llama 3.3 models, which may offer improved capabilities. Consider using the latest generation for optimal performance.

With the release of Llama 3.1, there has never been a better time to start building AI applications.

The AI SDK is a powerful TypeScript toolkit for building AI applications with large language models (LLMs) like Llama 3.1 alongside popular frameworks like React, Next.js, Vue, Svelte, Node.js, and more.

Llama 3.1

The release of Meta's Llama 3.1 is an important moment in AI development. As the first state-of-the-art open-weight AI model, Llama 3.1 is helping developers build AI apps faster. Available in 8B, 70B, and 405B sizes, these instruction-tuned models work well for tasks like dialogue generation, translation, reasoning, and code generation.

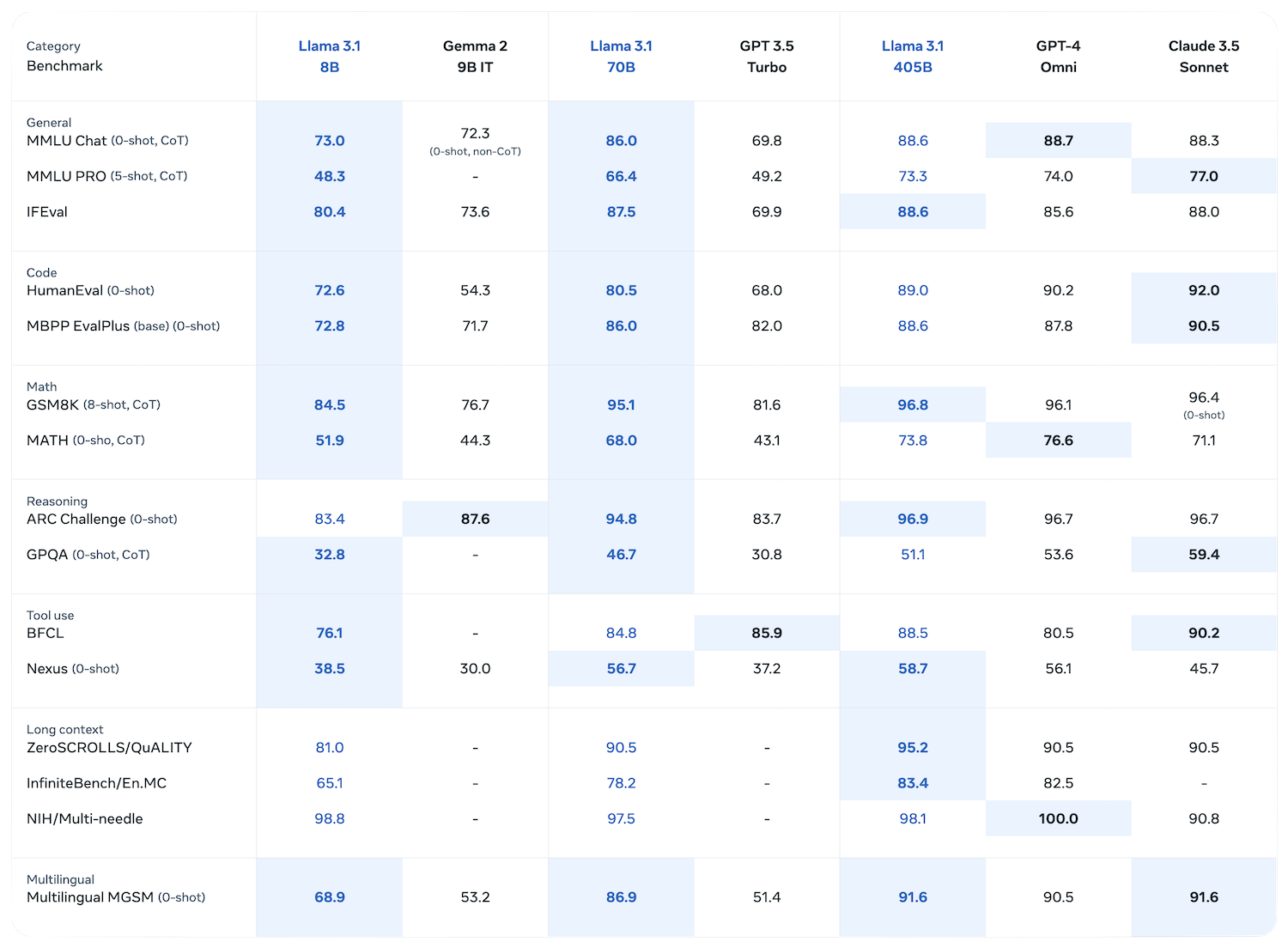

Benchmarks

Llama 3.1 surpasses most available open-source chat models on common industry benchmarks and even outperforms some closed-source models, offering superior performance in language nuances, contextual understanding, and complex multi-step tasks. The models' refined post-training processes significantly improve response alignment, reduce false refusal rates, and enhance answer diversity, making Llama 3.1 a powerful and accessible tool for building generative AI applications.

Source: Meta AI - Llama 3.1 Model Card

Choosing Model Size

Llama 3.1 introduces a new 405B-parameter model, the largest open-source model available at the time of its release. This model is designed to handle the most complex and demanding tasks.

When choosing between the different sizes of Llama 3.1 models (405B, 70B, 8B), consider the trade-off between performance and computational requirements. The 405B model offers the highest accuracy and capability for complex tasks but requires significant computational resources. The 70B model provides a good balance of performance and efficiency for most applications, while the 8B model is suitable for simpler tasks or resource-constrained environments where speed and lower computational overhead are priorities.
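One way to make this trade-off explicit in code is a small lookup from a rough task tier to a model ID. The sketch below is hypothetical: pickLlama31Model and the tier names are illustrative and not part of the AI SDK. The 70B and 405B IDs are the DeepInfra IDs used later in this guide; the 8B ID is assumed to follow the same naming pattern.

```typescript
// Hypothetical task tiers; adapt them to your own workload categories.
type TaskTier = "simple" | "general" | "complex";

// DeepInfra-style model IDs for the three Llama 3.1 sizes.
// The 8B ID is an assumption based on the naming of the 70B and 405B IDs.
const LLAMA_31_MODELS: Record<TaskTier, string> = {
  simple: "meta-llama/Meta-Llama-3.1-8B-Instruct", // fastest, lowest compute
  general: "meta-llama/Meta-Llama-3.1-70B-Instruct", // balanced default
  complex: "meta-llama/Meta-Llama-3.1-405B-Instruct", // most capable, most compute
};

// Return the model ID for a tier, defaulting to the balanced 70B model.
function pickLlama31Model(tier: TaskTier = "general"): string {
  return LLAMA_31_MODELS[tier];
}

console.log(pickLlama31Model("complex"));
```

The returned string can be passed to deepinfra(...) in any of the examples below, so moving between sizes becomes a configuration change rather than a code change.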

Getting Started with the AI SDK

The AI SDK is the TypeScript toolkit designed to help developers build AI-powered applications with React, Next.js, Vue, Svelte, Node.js, and more. Integrating LLMs into applications is complicated and heavily dependent on the specific model provider you use.

The AI SDK abstracts away the differences between model providers, eliminates boilerplate code for building chatbots, and allows you to go beyond text output to generate rich, interactive components.

At the center of the AI SDK is AI SDK Core, which provides a unified API to call any LLM. The code snippet below is all you need to call Llama 3.1 (using DeepInfra) with the AI SDK:

```typescript
import { generateText } from 'ai';
// The DeepInfra provider reads its API key from the DEEPINFRA_API_KEY environment variable by default.
import { deepinfra } from '@ai-sdk/deepinfra';

const { text } = await generateText({
  model: deepinfra('meta-llama/Meta-Llama-3.1-405B-Instruct'),
  prompt: 'What is love?',
});

console.log(text);
```

Llama 3.1 is available through many AI SDK providers, including DeepInfra, Amazon Bedrock, Baseten, Fireworks, and more.

AI SDK Core abstracts away the differences between model providers, allowing you to focus on building great applications. Prefer to use Amazon Bedrock? The unified interface also means that you can easily switch between models by changing just two lines of code.

```typescript
import { generateText } from 'ai';
import { bedrock } from '@ai-sdk/amazon-bedrock';

const { text } = await generateText({
  // Bedrock model IDs carry a version suffix such as ":0".
  model: bedrock('meta.llama3-1-405b-instruct-v1:0'),
  prompt: 'What is love?',
});
```

Streaming the Response

To stream the model's response as it's being generated, update your code snippet to use the streamText function.

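```typescript
import { streamText } from 'ai';
import { deepinfra } from '@ai-sdk/deepinfra';

const { textStream } = streamText({
  model: deepinfra('meta-llama/Meta-Llama-3.1-405B-Instruct'),
  prompt: 'What is love?',
});

// Print each chunk of text as soon as it arrives.
for await (const textPart of textStream) {
  process.stdout.write(textPart);
}
```

Generating Structured Data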

While text generation can be useful, you might want to generate structured JSON data. For example, you might want to extract information from text, classify data, or generate synthetic data. AI SDK Core provides two functions (generateObject and streamObject) to generate structured data, allowing you to constrain model outputs to a specific schema.

```typescript
import { generateObject } from 'ai';
import { deepinfra } from '@ai-sdk/deepinfra';
import { z } from 'zod';

const { object } = await generateObject({
  model: deepinfra('meta-llama/Meta-Llama-3.1-70B-Instruct'),
  // The schema constrains the model output and types the returned object.
  schema: z.object({
    recipe: z.object({
      name: z.string(),
      ingredients: z.array(z.object({ name: z.string(), amount: z.string() })),
      steps: z.array(z.string()),
    }),
  }),
  prompt: 'Generate a lasagna recipe.',
});
```

This code snippet will generate a type-safe recipe that conforms to the specified Zod schema.
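Because the schema drives the result type, code that consumes object is checked at compile time. The sketch below writes out by hand the type that z.infer would derive from this schema, then uses it with sample data standing in for a real model call. The RecipeResult interface, formatRecipe helper, and sample values are illustrative only.

```typescript
// Hand-written equivalent of the type z.infer derives from the recipe schema.
interface Ingredient {
  name: string;
  amount: string;
}

interface RecipeResult {
  recipe: {
    name: string;
    ingredients: Ingredient[];
    steps: string[];
  };
}

// Downstream code can rely on the shape without casts.
function formatRecipe({ recipe }: RecipeResult): string {
  const ingredients = recipe.ingredients.map(i => `- ${i.amount} ${i.name}`);
  const steps = recipe.steps.map((s, idx) => `${idx + 1}. ${s}`);
  return [recipe.name, ...ingredients, ...steps].join("\n");
}

// Sample data in place of a generateObject result.
const sample: RecipeResult = {
  recipe: {
    name: "Lasagna",
    ingredients: [{ name: "lasagna sheets", amount: "12" }],
    steps: ["Preheat the oven.", "Layer and bake."],
  },
};

console.log(formatRecipe(sample));
```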

Tools

While LLMs have incredible generation capabilities, they struggle with discrete tasks (e.g., mathematics) and with interacting with the outside world (e.g., getting the weather). The solution is tools: programs you provide to the model, which it can choose to call as needed.

Using Tools with the AI SDK

The AI SDK supports tool usage across several of its functions, including generateText and streamUI. By passing one or more tools to the tools parameter, you can extend the capabilities of LLMs, allowing them to perform discrete tasks and interact with external systems.
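Conceptually, each tool pairs a description that the model reads with an execute function that your code runs. The sketch below is a simplified, SDK-free model of that round trip: a registry of tools and a dispatcher that runs whichever tool call the model emits. The SimpleTool and ToolCall shapes and the runToolCall helper are hypothetical illustrations, not the AI SDK's internal types; when you pass tools to the SDK, this dispatch happens for you.

```typescript
// A simplified tool: a description for the model plus a function your code runs.
interface SimpleTool {
  description: string;
  execute: (args: Record<string, unknown>) => Promise<unknown>;
}

// Hypothetical shape of a tool call emitted by a model.
interface ToolCall {
  toolName: string;
  args: Record<string, unknown>;
}

const tools: Record<string, SimpleTool> = {
  weather: {
    description: "Get the weather in a location",
    // Static stub data in place of a real weather API.
    execute: async args => ({ location: args.location, temperature: 72 }),
  },
};

// Look up the requested tool and run it; unknown tool names are rejected.
async function runToolCall(call: ToolCall): Promise<unknown> {
  const selected = tools[call.toolName];
  if (!selected) {
    throw new Error(`Unknown tool: ${call.toolName}`);
  }
  return selected.execute(call.args);
}

runToolCall({ toolName: "weather", args: { location: "Berlin" } }).then(result =>
  console.log(result),
);
```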

Here's an example of how you can use a tool with the AI SDK and Llama 3.1:

```typescript
import { generateText, tool } from 'ai';
import { deepinfra } from '@ai-sdk/deepinfra';
import { z } from 'zod';

const { text, toolResults } = await generateText({
  model: deepinfra('meta-llama/Meta-Llama-3.1-70B-Instruct'),
  prompt: 'What is the weather like today?',
  tools: {
    weather: tool({
      description: 'Get the weather in a location',
      parameters: z.object({
        location: z.string().describe('The location to get the weather for'),
      }),
      // Simulated data: a random temperature between 62 and 82.
      execute: async ({ location }) => ({
        location,
        temperature: 72 + Math.floor(Math.random() * 21) - 10,
      }),
    }),
  },
});

// With the default single step, the model may stop after calling the tool,
// in which case text is empty and the tool output is in toolResults.
console.log(text, toolResults);
```

In this example, the weather tool lets the model look up weather data when it needs it. Here the execute function returns simulated data; in a real application it could call a weather API so the model can give accurate, up-to-date answers.

Agents

Agents take your AI applications a step further by allowing models to execute multiple steps (for example, a sequence of tool calls) in a non-deterministic way, making decisions based on context and user input.

Agents use LLMs to choose the next step in a problem-solving process. They can reason at each step and make decisions based on the evolving context.

Implementing Agents with the AI SDK

The AI SDK supports agent implementation through the maxSteps parameter. This allows the model to make multiple decisions and tool calls in a single interaction.
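To see what maxSteps bounds, here is a conceptual, SDK-free sketch of a multi-step loop: on each step the model either requests a tool call or returns a final answer, tool results are fed back, and the loop stops at an answer or after maxSteps steps. The scripted fakeModel stands in for an LLM, and the Step type and runAgent helper are hypothetical; the AI SDK's actual implementation differs in detail.

```typescript
// What a model can return on a single step (hypothetical, simplified).
type Step =
  | { type: "tool-call"; expression: string }
  | { type: "answer"; text: string };

// Scripted stand-in for an LLM: first requests a calculation, then answers with the result.
function fakeModel(history: string[]): Step {
  const lastResult = history.find(h => h.startsWith("result:"));
  if (!lastResult) {
    return { type: "tool-call", expression: "5000 - 3500" };
  }
  return { type: "answer", text: `The profit is ${lastResult.slice("result:".length)}.` };
}

// Minimal calculator that only handles "a - b", to keep the sketch dependency-free.
function subtract(expression: string): number {
  const [a, b] = expression.split("-").map(s => Number(s.trim()));
  return a - b;
}

// Run model steps until it answers or the step budget runs out.
function runAgent(maxSteps: number): string | undefined {
  const history: string[] = [];
  for (let step = 0; step < maxSteps; step++) {
    const next = fakeModel(history);
    if (next.type === "answer") {
      return next.text;
    }
    // Feed the tool result back so the next step can use it.
    history.push(`result:${subtract(next.expression)}`);
  }
  return undefined; // budget exhausted without a final answer
}

console.log(runAgent(5));
```

With a budget of one step, the loop ends right after the tool call, which mirrors why a single-step call may return a tool result but no final text.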

Here's an example of an agent that solves math problems:

```typescript
import { generateText, tool } from 'ai';
import { deepinfra } from '@ai-sdk/deepinfra';
import * as mathjs from 'mathjs';
import { z } from 'zod';

const problem =
  'Calculate the profit for a day if revenue is $5000 and expenses are $3500.';

const { text: answer } = await generateText({
  model: deepinfra('meta-llama/Meta-Llama-3.1-70B-Instruct'),
  system:
    'You are solving math problems. Reason step by step. Use the calculator when necessary.',
  prompt: problem,
  tools: {
    calculate: tool({
      description: 'A tool for evaluating mathematical expressions.',
      parameters: z.object({ expression: z.string() }),
      // Evaluate the expression with mathjs and return the result to the model.
      execute: async ({ expression }) => mathjs.evaluate(expression),
    }),
  },
  // Allow up to 5 steps so tool results can be fed back before the final answer.
  maxSteps: 5,
});

console.log(answer);
```

In this example, the agent can call the calculator tool multiple times if needed, reasoning through the problem step by step.
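For reference, the arithmetic the agent is expected to reach (the calculator would evaluate 5000 - 3500) is:

```latex
\text{profit} = \text{revenue} - \text{expenses} = \$5000 - \$3500 = \$1500
```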

Building Interactive Interfaces

AI SDK Core can be paired with AI SDK UI, another powerful component of the AI SDK, to streamline the process of building chat, completion, and assistant interfaces with popular frameworks like Next.js, Nuxt, SvelteKit, and SolidStart.

AI SDK UI provides robust abstractions that simplify the complex tasks of managing chat streams and UI updates on the frontend, enabling you to develop dynamic AI-driven interfaces more efficiently.

With four main hooks (useChat, useCompletion, useObject, and useAssistant), you can incorporate real-time chat capabilities, text completions, streamed JSON, and interactive assistant features into your app.

Let's explore building a chatbot with Next.js, the AI SDK, and Llama 3.1 (via DeepInfra):

```typescript
// app/api/chat/route.ts
import { streamText } from 'ai';
import { deepinfra } from '@ai-sdk/deepinfra';

// Allow streaming responses up to 30 seconds
export const maxDuration = 30;

export async function POST(req: Request) {
  const { messages } = await req.json();

  const result = streamText({
    model: deepinfra('meta-llama/Meta-Llama-3.1-70B-Instruct'),
    system: 'You are a helpful assistant.',
    messages,
  });

  return result.toDataStreamResponse();
}
```

```tsx
// app/page.tsx
'use client';

import { useChat } from '@ai-sdk/react';

export default function Page() {
  const { messages, input, handleInputChange, handleSubmit } = useChat();

  return (
    <>
      {messages.map(message => (
        <div key={message.id}>
          {message.role === 'user' ? 'User: ' : 'AI: '}
          {message.content}
        </div>
      ))}

      <form onSubmit={handleSubmit}>
        <input name="prompt" value={input} onChange={handleInputChange} />
        <button type="submit">Submit</button>
      </form>
    </>
  );
}
```

The useChat hook on your root page (app/page.tsx) sends a request to your route handler (app/api/chat/route.ts) whenever the user submits a message. The route handler calls Llama 3.1 through DeepInfra, and the response is streamed back in real time and displayed in the chat UI.

This enables a seamless chat experience where the user can see the AI response as soon as it is available, without having to wait for the entire response to be received.
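The difference between waiting for the full response and streaming it can be sketched without the SDK: given any async iterable of text chunks (the role textStream plays in the earlier streamText example), the UI can re-render after every chunk with the text accumulated so far. The fakeStream generator and renderIncrementally helper are hypothetical stand-ins, not part of the AI SDK.

```typescript
// Simulated model output arriving in chunks.
async function* fakeStream(): AsyncGenerator<string> {
  for (const chunk of ["Hel", "lo ", "world"]) {
    yield chunk;
  }
}

// Accumulate chunks and call render with the partial text after each one.
async function renderIncrementally(
  stream: AsyncIterable<string>,
  render: (partial: string) => void,
): Promise<string> {
  let text = "";
  for await (const chunk of stream) {
    text += chunk;
    render(text); // the user sees progress immediately
  }
  return text; // the full response once the stream ends
}

renderIncrementally(fakeStream(), partial => console.log(partial));
```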

Going Beyond Text

The AI SDK's React Server Components (RSC) API enables you to create rich, interactive interfaces that go beyond simple text generation. With the streamUI function, you can dynamically stream React components from the server to the client.

Let's dive into how you can leverage tools with AI SDK RSC to build a generative user interface with Next.js (App Router).

First, create a Server Action.

```tsx
'use server';

import { streamUI } from 'ai/rsc';
import { deepinfra } from '@ai-sdk/deepinfra';
import { z } from 'zod';

export async function streamComponent() {
  const result = await streamUI({
    model: deepinfra('meta-llama/Meta-Llama-3.1-70B-Instruct'),
    prompt: 'Get the weather for San Francisco',
    text: ({ content }) => <div>{content}</div>,
    tools: {
      getWeather: {
        description: 'Get the weather for a location',
        parameters: z.object({ location: z.string() }),
        generate: async function* ({ location }) {
          // Shown immediately while the data is being fetched.
          yield <div>loading...</div>;
          const weather = '25c'; // await getWeather(location);
          // The returned element replaces the loading state.
          return (
            <div>
              the weather in {location} is {weather}.
            </div>
          );
        },
      },
    },
  });

  return result.value;
}
```

In this example, if the model decides to use the getWeather tool, its generate function first yields a loading placeholder while the weather data is fetched, then returns a component with the result (the data is static in this example). This allows for a more dynamic and responsive UI that adapts to the model's decisions and to external data.
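The generate function in the Server Action is an async generator: every yield produces an intermediate UI state, and the return value becomes the final one. The plain-TypeScript sketch below reproduces that pattern with strings instead of JSX so it runs outside React; fetchWeather, generateWeather, and drain are hypothetical helpers, and the weather value is static, as in the example.

```typescript
// Stand-in for a real weather lookup (static data, like the example).
async function fetchWeather(location: string): Promise<string> {
  return location === "San Francisco" ? "25c" : "unknown";
}

// Same shape as the generate function: yield a loading state, return the final result.
async function* generateWeather(location: string): AsyncGenerator<string, string> {
  yield "loading...";
  const weather = await fetchWeather(location);
  return `the weather in ${location} is ${weather}.`;
}

// Collect every yielded value and the final return value, in order.
async function drain(gen: AsyncGenerator<string, string>): Promise<string[]> {
  const states: string[] = [];
  let result = await gen.next();
  while (!result.done) {
    states.push(result.value);
    result = await gen.next();
  }
  states.push(result.value); // the returned value arrives with done: true
  return states;
}

drain(generateWeather("San Francisco")).then(states => console.log(states));
```

A plain for await loop would skip the returned value, which is why the sketch calls next() directly. In streamUI, each of these states is a React element that takes the place of the previous one on the client.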

On the frontend, you can call this Server Action like any other asynchronous function in your application. In this case, the function returns a React node that you can render directly.

```tsx
'use client';

import { useState } from 'react';
import { streamComponent } from './actions';

export default function Page() {
  const [component, setComponent] = useState<React.ReactNode>();

  return (
    <div>
      <form
        onSubmit={async e => {
          e.preventDefault();
          setComponent(await streamComponent());
        }}
      >
        <button>Stream Component</button>
      </form>
      <div>{component}</div>
    </div>
  );
}
```

To see AI SDK RSC in action, check out our open-source Next.js Gemini Chatbot.

Migrate from OpenAI

One of the key advantages of the AI SDK is its unified API, which makes it incredibly easy to switch between different AI models and providers. This flexibility is particularly useful when you want to migrate from one model to another, such as moving from OpenAI's GPT models to Meta's Llama models hosted on DeepInfra.

Here's how simple the migration process can be:

OpenAI Example:

```typescript
import { generateText } from 'ai';
import { openai } from '@ai-sdk/openai';

const { text } = await generateText({
  model: openai('gpt-4-turbo'),
  prompt: 'What is love?',
});
```

Llama on DeepInfra Example:

```typescript
import { generateText } from 'ai';
import { deepinfra } from '@ai-sdk/deepinfra';

const { text } = await generateText({
  model: deepinfra('meta-llama/Meta-Llama-3.1-70B-Instruct'),
  prompt: 'What is love?',
});
```

Thanks to the unified API, the core structure of the code remains the same. The main differences are:

- Importing the DeepInfra provider instead of the OpenAI provider.

- Changing the model from openai("gpt-4-turbo") to deepinfra("meta-llama/Meta-Llama-3.1-70B-Instruct").

With just these few changes, you've migrated from using OpenAI's GPT-4-Turbo to Meta's Llama 3.1 hosted on DeepInfra. The generateText function and its usage remain identical, showcasing the power of the AI SDK's unified API.

This feature allows you to easily experiment with different models, compare their performance, and choose the best one for your specific use case without having to rewrite large portions of your codebase.
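One way to make such experiments cheap is to keep the provider and model ID in configuration rather than in code. The sketch below is a hypothetical, SDK-free illustration: resolveModelConfig reads environment-style settings and falls back to a default, and in a real app you would pass the resulting ID to openai(...) or deepinfra(...). The LLM_PROVIDER and LLM_MODEL variable names are assumptions.

```typescript
type Provider = "openai" | "deepinfra";

interface ModelConfig {
  provider: Provider;
  modelId: string;
}

// Default model per provider, using the IDs from the examples above.
const DEFAULT_MODELS: Record<Provider, string> = {
  openai: "gpt-4-turbo",
  deepinfra: "meta-llama/Meta-Llama-3.1-70B-Instruct",
};

// Resolve provider and model from environment-style settings (hypothetical variable names).
function resolveModelConfig(env: Record<string, string | undefined>): ModelConfig {
  const provider: Provider = env.LLM_PROVIDER === "openai" ? "openai" : "deepinfra";
  const modelId = env.LLM_MODEL ?? DEFAULT_MODELS[provider];
  return { provider, modelId };
}

// Switching providers without touching application code.
console.log(resolveModelConfig({ LLM_PROVIDER: "openai" }));
console.log(resolveModelConfig({}));
```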

Prompt Engineering and Fine-tuning

While the Llama 3.1 family of models is powerful out of the box, its performance can be enhanced through effective prompt engineering and fine-tuning techniques.

Prompt Engineering

Prompt engineering is the practice of crafting input prompts to elicit desired outputs from language models. It involves structuring and phrasing prompts in ways that guide the model towards producing more accurate, relevant, and coherent responses.
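As a small illustration, the hypothetical helper below assembles a prompt from three common ingredients (a role-setting system prompt, explicit output constraints, and a few worked examples, i.e. few-shot prompting) into the system and messages values that AI SDK Core functions accept. The helper name and structure are assumptions for illustration; the best structure for a given task is something to test empirically.

```typescript
interface Message {
  role: "user" | "assistant";
  content: string;
}

interface FewShotExample {
  input: string;
  output: string;
}

// Build a system prompt plus few-shot messages, ending with the real user input.
function buildPrompt(
  role: string,
  constraints: string[],
  examples: FewShotExample[],
  userInput: string,
): { system: string; messages: Message[] } {
  const system = [role, ...constraints.map(c => `- ${c}`)].join("\n");
  // Each example becomes a user/assistant exchange the model can imitate.
  const shots = examples.flatMap((ex): Message[] => [
    { role: "user", content: ex.input },
    { role: "assistant", content: ex.output },
  ]);
  return { system, messages: [...shots, { role: "user", content: userInput }] };
}

const prompt = buildPrompt(
  "You are a concise sentiment classifier.",
  ["Answer with exactly one word: positive, negative, or neutral."],
  [{ input: "I love this product!", output: "positive" }],
  "The delivery was late and the box was damaged.",
);

console.log(prompt.system);
console.log(prompt.messages);
```

The returned object could then be spread into a call such as generateText({ model, ...prompt }).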

For more information on prompt engineering techniques (specific to Llama models), check out these resources:

Fine-tuning

Fine-tuning involves further training a pre-trained model on a specific dataset or task to customize its performance for particular use cases. This process allows you to adapt Llama 3.1 to your specific domain or application, potentially improving its accuracy and relevance for your needs.
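Fine-tuning typically starts with a dataset of example conversations. Many hosted fine-tuning services accept JSON Lines files in which each line is one conversation in a messages format, but the exact schema varies by provider, so treat the shape below as an assumption and check your provider's documentation. The toJsonl helper and the sample conversation are hypothetical.

```typescript
interface TrainingMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface TrainingExample {
  messages: TrainingMessage[];
}

// Serialize examples as JSON Lines: one JSON object per line.
function toJsonl(examples: TrainingExample[]): string {
  return examples.map(ex => JSON.stringify(ex)).join("\n");
}

// Illustrative sample conversation for a domain-specific assistant.
const dataset: TrainingExample[] = [
  {
    messages: [
      { role: "system", content: "You answer questions about our return policy." },
      { role: "user", content: "Can I return an opened item?" },
      { role: "assistant", content: "Yes, within 30 days with the receipt." },
    ],
  },
];

console.log(toJsonl(dataset));
```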

To learn more about fine-tuning Llama models, check out these resources:

- Official Fine-tuning Llama Guide

- Fine-tuning and Inference with Llama 3

- Fine-tuning Models with Fireworks AI

- Fine-tuning Llama with Modal

Conclusion

The AI SDK offers a powerful and flexible way to integrate cutting-edge AI models like Llama 3.1 into your applications. With AI SDK Core, you can seamlessly switch between different AI models and providers by changing just two lines of code. This flexibility allows for quick experimentation and adaptation, reducing the time required to change models from days to minutes.

The AI SDK helps keep your application clean and modular, accelerating development and future-proofing it against the rapidly evolving AI landscape.

Ready to get started? Here's how you can dive in:

- Explore the documentation at sdk.vercel.ai/docs to understand the full capabilities of the AI SDK.

- Check out practical examples at sdk.vercel.ai/examples to see the SDK in action and get inspired for your own projects.

- Dive deeper with advanced guides on topics like Retrieval-Augmented Generation (RAG) and multi-modal chat at sdk.vercel.ai/docs/guides.

- Check out ready-to-deploy AI templates at vercel.com/templates?type=ai.